2017-Multimedia-Satellite-Task

What is this task about?

The multimedia satellite task requires participants to retrieve multimedia content from social media streams (Flickr, Twitter, Wikipedia) about events that can be remotely sensed, such as flooding, fires, and land clearing, and to link that content to satellite imagery. The purpose of this task is to augment events that are present in satellite images with social media reports in order to provide a more comprehensive view. This is of vital importance in the context of situational awareness and emergency response for the coordination of rescue efforts.

To align with recent events, the challenge focuses on flooding events, which constitute a special kind of remotely sensed event. The multimedia satellite task is a combination of satellite image processing, social media retrieval and fusion of both modalities. The different challenges are addressed in the following two subtasks.

Disaster Image Retrieval from Social Media [DIRSM]

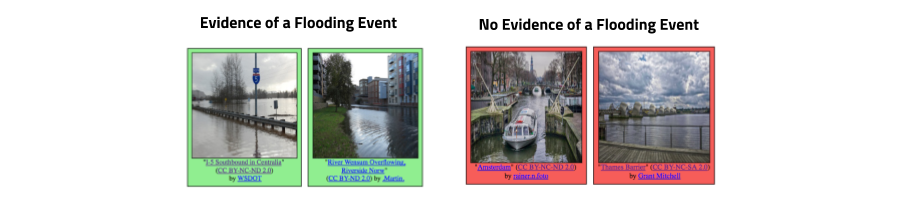

The goal of this task is to retrieve all images that show direct evidence of a flooding event from social media streams, independently of a particular event. The objective is to design a system/algorithm that, given any collection of multimedia images and their metadata (e.g., YFCC100M, Twitter, Wikipedia, news articles), is able to identify those images that are related to a flooding event. Please note that only images that show evidence of a flooding event will be considered True Positives. Specifically, we define images showing “unexpected high water levels in industrial, residential, commercial and agricultural areas” as images providing evidence of a flooding event. The main challenges of this task are the proper discrimination of the water level in different areas (e.g., images showing a lake vs. images showing high water in a street) as well as the consideration of different types of flooding events (e.g., coastal flooding, river flooding, pluvial flooding).

Flood-Detection in Satellite Images [FDSI]

The aim of this task is to develop a method/algorithm that is able to identify regions affected by flooding in satellite images. Participants receive patches of satellite images that cover a wide spatial area for multiple instances of flooding events. The satellite image patches are provided by the organizers of the task and were recorded during (or shortly after) the flooding event. For a list of flooding events at different locations, participants submit segmentation masks of the flooded area for the given image patches. The percentage of correctly labeled pixels will be evaluated.
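To make "percentage of correctly labeled pixels" concrete, the following is a minimal sketch of a pixel accuracy computation for one patch. It assumes masks are given as nested lists of 0 (background) and 1 (flooded); the function name `pixel_accuracy` and this mask representation are illustrative assumptions, not part of the task definition. The official FDSI metric is described in the evaluation section.

```python
from typing import Sequence


def pixel_accuracy(pred: Sequence[Sequence[int]], true: Sequence[Sequence[int]]) -> float:
    """Fraction of pixels whose predicted label equals the reference label.

    Both masks are assumed to be 2D nested lists of identical shape,
    with 1 for flooded pixels and 0 for background (hypothetical format).
    """
    correct = 0
    total = 0
    for pred_row, true_row in zip(pred, true):
        for p, t in zip(pred_row, true_row):
            correct += int(bool(p) == bool(t))
            total += 1
    # An empty mask has no pixels to score; returning 0.0 here is an assumption.
    return correct / total if total else 0.0


predicted = [[1, 1], [0, 0]]
reference = [[1, 0], [1, 0]]
accuracy = pixel_accuracy(predicted, reference)
```

Pixel accuracy rewards correctly labeled background as much as correctly labeled flood pixels, so it can look high on patches where little area is flooded.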

What data is provided?

The images and corresponding metadata for the DIRSM task have been extracted from the YFCC100M dataset. These images are shared under Creative Commons licenses that allow their redistribution. All images are sampled such that there is only one image per user in the dataset. Images will be labeled with one of two classes: (1) showing evidence of a flooding event and (2) showing no evidence of a flooding event. In addition to the images, we will also supply participants with additional metadata. We will release a development set of 5280 images. Precomputed features will be provided along with the dataset to help teams from different communities participate in the task.

For the FDSI task, we will provide satellite image patches of flooded regions recorded during (or shortly after) a flooding event. The dataset will contain image patches for different instances of flooding events. The patches have been extracted from imagery of Planet’s four-band satellites (3 meters per pixel resolution), with Planet [7] as the underlying source of data. For each satellite image patch, we provide a segmentation mask in which each pixel carries a class label for either background or flooded area.

How is the performance measured?

The images for the DIRSM task have been manually annotated with the two class labels (showing evidence/showing no evidence of a flooding event) by human assessors. The correctness of retrieved images will be evaluated with the metric Average Precision at X (AP@X) at various cutoffs, X = {50, 100, 200, 300, 400, 500}. The metric measures the number of relevant images among the top X retrieved results and takes their rank into consideration.
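As an illustration of how AP@X rewards relevant images that appear early in the ranking, here is a minimal sketch. The task description does not state the exact normalisation, so this version assumes the sum of precision values at relevant ranks is divided by the number of relevant images found in the top X; other common definitions divide by min(X, total number of relevant images). The function name `average_precision_at` is hypothetical.

```python
from typing import Sequence


def average_precision_at(relevance: Sequence[int], x: int) -> float:
    """AP@X for one ranked result list.

    relevance[i] is 1 if the image at rank i + 1 shows evidence of a
    flooding event according to the ground truth, else 0.
    """
    hits = 0
    precision_sum = 0.0
    for rank, rel in enumerate(relevance[:x], start=1):
        if rel:
            hits += 1
            # Precision at this rank: relevant images so far / rank.
            precision_sum += hits / rank
    # Assumed normalisation: relevant images retrieved within the top X.
    return precision_sum / hits if hits else 0.0


# Toy ranking: relevant images at ranks 1, 3 and 4.
ranked_relevance = [1, 0, 1, 1, 0]
ap_at_5 = average_precision_at(ranked_relevance, 5)
```

With this toy ranking the precision values at the relevant ranks are 1/1, 2/3 and 3/4, so moving a relevant image further up the list increases the score even when the number of relevant images in the top X stays the same.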

The segmentation masks of flooded areas in the satellite images for the FDSI task have been extracted by human assessors. The official evaluation metric for the generated segmentation masks of flooded areas in the satellite image patches is the Jaccard Index, commonly known as the PASCAL VOC intersection-over-union metric:

IoU = TP / (TP + FP + FN)

where TP, FP, and FN are the numbers of true positive, false positive, and false negative pixels, respectively, accumulated over the whole test set. The evaluation is based on two test sets:

- Evaluation on unseen patches which are extracted from the same region as in the dev-set

- Evaluation on unseen patches which are extracted from a new region that is not present in the dev-set.
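The IoU formula given above can be sketched as follows. Following the statement that TP, FP, and FN are determined over the whole test set, the counts are accumulated across all patches before the ratio is taken, rather than averaging per-patch scores. The nested-list mask format (1 for flooded, 0 for background) and the function name `jaccard_index` are assumptions made for illustration.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]


def jaccard_index(pred_masks: Sequence[Mask], true_masks: Sequence[Mask]) -> float:
    """IoU = TP / (TP + FP + FN), with counts pooled over all patches."""
    tp = fp = fn = 0
    for pred, true in zip(pred_masks, true_masks):
        for pred_row, true_row in zip(pred, true):
            for p, t in zip(pred_row, true_row):
                if p and t:
                    tp += 1  # flooded pixel correctly predicted
                elif p and not t:
                    fp += 1  # background predicted as flooded
                elif t and not p:
                    fn += 1  # flooded pixel missed
    denominator = tp + fp + fn
    # If no pixel is flooded in either prediction or reference, the ratio
    # is undefined; returning 1.0 in that case is an assumption.
    return tp / denominator if denominator else 1.0


predictions = [[[1, 1], [0, 0]], [[1, 0]]]
references = [[[1, 0], [1, 0]], [[1, 0]]]
iou = jaccard_index(predictions, references)
```

In this toy example the pooled counts are TP = 2, FP = 1 and FN = 1. True negatives do not appear in the formula, so large correctly labeled background areas do not raise the score.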

What are important dates for Task-Participation?

| Date | Milestone |
| --- | --- |
| May 1, 2017 | Development data release |
| June 1, 2017 | Test data release |
| August 17, 2017 | Run submission due |
| August 21, 2017 | Results returned to the participants |
| August 28, 2017 | Working notes paper initial submission deadline |
| August 30, 2017 | Working notes review returned to the participants |
| September 4, 2017 | Camera ready working notes paper due |
| September 13-15, 2017 | MediaEval Workshop, Dublin, Ireland |

Who are the task organizers?

- Benjamin Bischke, German Research Center for Artificial Intelligence (DFKI), Germany (first.last at dfki.de)

- Damian Borth, German Research Center for Artificial Intelligence (DFKI), Germany (first.last at dfki.de)

- Christian Schulze, German Research Center for Artificial Intelligence (DFKI), Germany (first.last at dfki.de)

- Venkat Srinivasan, Virginia Tech (Blacksburg VA), US

- Alan Woodley, Queensland University of Technology (QUT), Australia

Where to get more information?

Detailed information on the task, including a description of the data, the provided features, and run submission, can be found on the task wiki. If you need help or have any questions, please contact Benjamin Bischke (firstname.lastname at dfki.de).

What are recommended readings and task references?

- Bischke, Benjamin, et al. “Contextual enrichment of remote-sensed events with social media streams.” Proceedings of the 2016 ACM on Multimedia Conference. ACM, 2016.

- Chaouch, Naira, et al. “A synergetic use of satellite imagery from SAR and optical sensors to improve coastal flood mapping in the Gulf of Mexico.” Hydrological Processes 26.11 (2012): 1617-1628.

- Klemas, Victor. “Remote sensing of floods and flood-prone areas: an overview.” Journal of Coastal Research 31.4 (2014): 1005-1013.

- Lagerstrom, Ryan, et al. “Image classification to support emergency situation awareness.” Frontiers in Robotics and AI 3 (2016): 54.

- Ogashawara, Igor, Marcelo Pedroso Curtarelli, and Celso M. Ferreira. “The use of optical remote sensing for mapping flooded areas.” International Journal of Engineering Research and Application 3.5 (2013): 1-5.

- Peters, Robin, and J. P. D. Albuquerque. “Investigating images as indicators for relevant social media messages in disaster management.” The 12th International Conference on Information Systems for Crisis Response and Management. 2015.

- Planet Team (2017). Planet Application Program Interface: In Space for Life on Earth. San Francisco, CA.

- Schnebele, Emily, et al. “Real time estimation of the Calgary floods using limited remote sensing data.” Water 6.2 (2014): 381-398.

- Ticehurst, C. J., P. Dyce, and J. P. Guerschman. “Using passive microwave and optical remote sensing to monitor flood inundation in support of hydrologic modelling.” Interfacing modelling and simulation with mathematical and computational sciences, 18th World IMACS/MODSIM Congress. 2009.

- Wedderburn-Bisshop, Gerard, et al. “Methodology for mapping change in woody landcover over Queensland from 1999 to 2001 using Landsat ETM+.” Department of Natural Resources and Mines, 2002.

- Woodley, Alan, et al. “Introducing the Sky and the Social Eye.” Working Notes Proceedings of the MediaEval 2016 Workshop. Vol. 1739. CEUR Workshop Proceedings, 2016.

- Yang, Yimin, et al. “Hierarchical disaster image classification for situation report enhancement.” Information Reuse and Integration (IRI), 2011 IEEE International Conference on. IEEE, 2011.